Global data volume is increasing dramatically in the big data era. New technologies keep emerging, and they generate enormous amounts of data in turn. Data storage, security, and mining are becoming increasingly crucial. Because traditional data storage technology can no longer keep up with the rate of data generation, new high-density, durable, and affordable storage techniques must be developed.

Traditional data storage techniques essentially store one-dimensional data on a two-dimensional surface. To overcome the constraints of current technologies, we must consider increasing the number of storage dimensions.

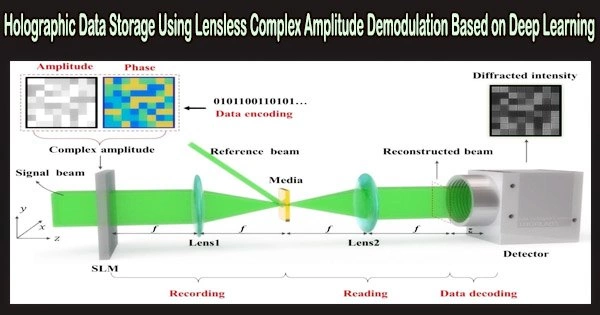

Holographic data storage appears to be a feasible solution. A hologram is recorded in the medium through interference between an information beam and a reference beam, each carrying an encoded pattern. During readout, illuminating the hologram with the reference beam alone diffracts the light into a reconstruction of the information pattern.
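The recording and readout steps can be written compactly in the standard textbook form. The symbols are introduced here for illustration and are not taken from the article: $O$ is the information beam, $R$ is the reference beam, and the recorded transmittance is assumed to be proportional to the exposure intensity $I$.

$$
I = |O + R|^2 = |O|^2 + |R|^2 + O R^{*} + O^{*} R
$$

$$
R \cdot I = R\,\bigl(|O|^2 + |R|^2\bigr) + |R|^2\, O + R^2\, O^{*}
$$

Under these assumptions, the term $|R|^2\, O$ in the second line is a copy of the information beam, which is why illumination with the reference beam alone is enough to reconstruct the stored pattern.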

Holographic data storage is a strong contender for the next generation of storage technology because it combines three-dimensional volume storage with two-dimensional data transfer. This allows for higher storage densities and faster data transfer rates.

Holographic data storage has been discussed for about 60 years, yet it has not reached practical use. One reason is that conventional holographic data storage employs only amplitude modulation, which does not exploit holography's ability to record both amplitude and phase and results in storage densities far lower than those predicted by theory.

To take full advantage of holographic data storage, complex amplitude modulation, which encodes data in both amplitude and phase, must be used for recording and reading. However, because detectors respond only to intensity and cannot retrieve the phase directly, phase readout is the main technical obstacle.
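A minimal sketch of why phase is lost at the detector, assuming an idealized square-law detector that records only the squared modulus of the field. The function name and the sample values are hypothetical and chosen purely for illustration.

```python
import cmath
import math


def detected_intensity(amplitude, phase):
    """Intensity an idealized square-law detector records for one pixel."""
    # Complex amplitude of the light field: A * exp(i * phi)
    field = amplitude * cmath.exp(1j * phase)
    # The detector responds to |field|^2, so the phase term drops out.
    return abs(field) ** 2


# Two pixels with the same amplitude but different phase values.
intensity_a = detected_intensity(0.8, 0.0)
intensity_b = detected_intensity(0.8, math.pi / 2)

# The two readings are indistinguishable to the detector.
phase_is_invisible = math.isclose(intensity_a, intensity_b)
```

Any direct-detection scheme therefore needs some other mechanism, such as interference or diffraction, to turn phase differences into intensity differences before the light reaches the detector.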

Interferometric phase readout is problematic because it makes the system unstable and impractical, while classic non-interferometric methods often require iterative calculations, which slow down data transfer.

In a recent article published in Opto-Electronic Advances, researchers propose using the near-field diffraction intensity image to read accurate amplitude and phase information simultaneously and non-iteratively, without interferometry, thereby resolving the main technical problem of complex amplitude modulation holographic data storage.

The model is an end-to-end convolutional neural network that extracts low-frequency and high-frequency image features from near-field diffraction patterns. These features correspond to the amplitude encoding and the phase encoding, respectively. Once trained, the network generalizes well enough to accurately predict new complex amplitude encoded data.

In essence, deep learning compensates for the dimension lost when phase cannot be detected in space by accumulating enough redundant feature information in the time dimension during training. This makes holographic data storage a natural fit for deep learning.

Unlike in other imaging applications, the encoding in holographic data storage is adjustable. This encoding prior can be used to deliberately design the encoding rules, providing more distinct data samples and improving the effectiveness of deep learning.

The deep learning-based near-field diffraction decoding system was proposed by Prof. Xiaodi Tan’s research team at Fujian Normal University. It reads both amplitude and phase information quickly and accurately without interferometry or iterative computation, addressing the main technical challenge of complex amplitude modulation in holographic data storage.

Three factors are key to successfully combining holographic data storage with deep learning. The first is lensless near-field diffraction intensity detection. The lensless setup and the choice of near-field distance together ensure that the light field diffracts just enough for phase changes to be transferred into the intensity distribution while the amplitude features are still preserved.

This correspondence holds in simulations and experiments within a limited range of distances. The precise diffraction distance depends on the spatial frequency and complexity of the input encoding, among other factors. For instance, if the encoding has a high spatial frequency, the diffraction effect is stronger, so the near-field diffraction distance should be shorter.
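How a short free-space propagation turns phase into measurable intensity can be sketched numerically. The example below uses the angular spectrum method, a standard scalar diffraction model that is swapped in here for illustration and is not necessarily the simulation used by the researchers. The wavelength, pixel pitch, propagation distance, phase levels, and page size are all hypothetical values.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a sampled complex field over `distance` in free space."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_pitch)  # spatial frequencies, cycles/m
    fy = np.fft.fftfreq(ny, d=pixel_pitch)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0  # evanescent components are discarded
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


# Hypothetical phase-only data page: 16 x 16 data pixels, 8 x 8 samples each.
rng = np.random.default_rng(0)
levels = np.array([0.0, np.pi / 2, np.pi, 3 * np.pi / 2])  # assumed phase levels
blocks = rng.choice(levels, size=(16, 16))
phase = np.kron(blocks, np.ones((8, 8)))
field = np.exp(1j * phase)  # unit amplitude everywhere

# Intensity directly at the data page: flat, the phase is invisible.
intensity_at_page = np.abs(field) ** 2

# Intensity after a short lensless propagation (assumed 532 nm, 8 um, 2 mm).
propagated = angular_spectrum_propagate(field, 532e-9, 8e-6, 2e-3)
intensity_near_field = np.abs(propagated) ** 2

contrast_at_page = intensity_at_page.std()        # no structure to detect
contrast_near_field = intensity_near_field.std()  # phase edges now show up
```

In this sketch the intensity is uniform at the data page but structured after propagation, which is the effect the lensless near-field detection relies on. Changing `distance` shows how the pattern depends on the chosen near-field distance.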

The second key point is finding the features that differ between amplitude and phase. Because both are learned from the same near-field diffraction intensity map, each must have distinguishing features so that the two are not confused.

The researchers found that the amplitude learning network relies on the intensity distribution of the low-frequency part, while the phase learning network relies on the phase difference pattern of the high-frequency part. As a result, amplitude and phase can be reconstructed independently from the same near-field diffraction intensity map without interfering with each other.
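To make the terms "low-frequency part" and "high-frequency part" concrete, the sketch below splits an image into two bands with an ideal Fourier-domain mask. This is only an illustration of the two bands: in the work described here the separation is learned by the convolutional network, not done with a hand-built filter. The function name, cutoff, and synthetic test image are assumptions.

```python
import numpy as np


def split_frequency_bands(image, cutoff):
    """Split an image into low- and high-frequency parts.

    `cutoff` is a radial spatial frequency in cycles per pixel (0 to 0.5).
    """
    ny, nx = image.shape
    fx = np.fft.fftfreq(nx)
    fy = np.fft.fftfreq(ny)
    FX, FY = np.meshgrid(fx, fy)
    low_mask = np.hypot(FX, FY) <= cutoff  # ideal circular low-pass mask
    low = np.fft.ifft2(np.fft.fft2(image) * low_mask).real
    high = image - low  # everything the low-pass mask removed
    return low, high


# Synthetic stand-in for a diffraction intensity map (not real data):
# a slowly varying envelope plus fine fringes.
y, x = np.mgrid[0:64, 0:64]
envelope = 1.0 + 0.5 * np.cos(2 * np.pi * x / 64)  # 1/64 cycles per pixel
fringes = 0.2 * np.cos(2 * np.pi * x / 4)          # 1/4 cycles per pixel
image = envelope + fringes

low, high = split_frequency_bands(image, cutoff=0.1)
```

With this synthetic image, `low` recovers the slow envelope and `high` recovers the fine fringes, mirroring the roles the article assigns to the amplitude and phase features.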

The third key point is unequal-interval (non-uniform) encoding. Uniform encoding is typically used because it has a larger encoding interval, which reduces interference when the encoding is reconstructed. In this method, however, uniform-interval encoding produces phase difference combinations that are exactly the same, making it impossible for deep learning to distinguish the corresponding diffraction features.

Non-uniform encoding therefore significantly increases sample variety, enabling the deep learning network to recognize complex amplitude encoding accurately.
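The effect of non-uniform spacing on sample variety can be checked with a short calculation. The sketch below counts how many distinct phase differences arise between pairs of code levels. Both sets of levels are hypothetical examples, not the values used by the researchers.

```python
import math
from itertools import permutations


def distinct_phase_differences(levels, decimals=6):
    """Distinct phase differences (mod 2*pi) between ordered pairs of levels."""
    two_pi = 2 * math.pi
    differences = set()
    for first, second in permutations(levels, 2):
        # Wrap the difference into [0, 2*pi) and round away float noise.
        differences.add(round((second - first) % two_pi, decimals))
    return differences


# Hypothetical four-level phase codes.
uniform_levels = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
non_uniform_levels = [0.0, math.pi / 6, 2 * math.pi / 3, 3 * math.pi / 2]

uniform_count = len(distinct_phase_differences(uniform_levels))
non_uniform_count = len(distinct_phase_differences(non_uniform_levels))
```

For these example levels, the twelve ordered pairs of the uniform code collapse onto only three distinct phase differences, while the non-uniform code yields eight. Since neighboring pixels with the same phase difference diffract in the same way, more distinct differences mean more distinguishable features for the network.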

Beyond holographic data storage, this research has implications for other deep learning-based computational imaging domains. The physical meaning of deep learning’s “black box” is unclear, which restricts its applications.

Because holographic data storage allows the encoding to be designed freely, it gives research into this question a head start.

Researchers can therefore determine which encoding rules are best suited to deep learning decoding. Doing so involves creating an evaluation mechanism for deep learning-based complex amplitude encoding and decoding, examining how different encoding conditions affect decoding performance, and developing optimization strategies for complex amplitude encoding in holographic data storage.

This can lay the groundwork for opening the “black box” of deep learning and for assigning structured physical meaning to the parameters of the deep neural network.