In the broadest investigation yet of deep neural networks trained to perform auditory tasks, researchers discovered that the majority of these models develop internal representations with features similar to those exhibited in the human brain when listening to the same sounds.

Computational models that mimic the structure and function of the human auditory system could help researchers design better hearing aids, cochlear implants, and brain-machine interfaces. A new study from MIT has found that modern computational models derived from machine learning are moving closer to this goal.

In the broadest investigation yet of deep neural networks trained to perform auditory tasks, the MIT team discovered that the majority of these models develop internal representations with features similar to those exhibited in the human brain when listening to the same sounds.

The study also offers insight into how best to train this type of model. The researchers found that models trained on auditory input that includes background noise more closely mimic the activation patterns of the human auditory cortex.

“What sets this study apart is it is the most comprehensive comparison of these kinds of models to the auditory system so far. The study suggests that models that are derived from machine learning are a step in the right direction, and it gives us some clues as to what tends to make them better models of the brain,” says Josh McDermott, an associate professor of brain and cognitive sciences at MIT, a member of MIT’s McGovern Institute for Brain Research and Center for Brains, Minds, and Machines, and the senior author of the study.

MIT graduate student Greta Tuckute and Jenelle Feather PhD ’22 are the lead authors of the open-access paper, which appears today in PLOS Biology.

Models of hearing

Deep neural networks are computational models made up of many layers of information-processing units that can be trained on large volumes of data to perform specific tasks. This type of model has become widely used in many applications, and neuroscientists have begun to explore whether these systems can also be used to describe how the human brain performs certain tasks.

“These models that are built with machine learning are able to mediate behaviors on a scale that really wasn’t possible with previous types of models, and that has led to interest in whether or not the representations in the models might capture things that are happening in the brain,” McDermott says.

When a neural network is performing a task, its processing units generate activation patterns in response to each audio input it receives, such as a word or other type of sound. Those model representations of the input can be compared to the activation patterns seen in fMRI brain scans of people listening to the same input.
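The article does not say which comparison metric the study used. One widely used option for this kind of comparison is representational similarity analysis (RSA): build a matrix of pairwise dissimilarities between sounds for the model, build another for the brain data, and correlate the two. The following is a minimal sketch under that assumption, using NumPy. The function names `rdm` and `rsa_score` are hypothetical, and the random arrays stand in for real model activations and fMRI responses.

```python
import numpy as np


def rdm(responses):
    """Representational dissimilarity matrix.

    responses: array of shape (n_sounds, n_units), one row per sound.
    Returns an (n_sounds, n_sounds) matrix of 1 - Pearson correlation
    between the response patterns of every pair of sounds.
    """
    return 1.0 - np.corrcoef(np.asarray(responses, dtype=float))


def rsa_score(model_acts, brain_resps):
    """Pearson correlation between the upper triangles of two RDMs.

    Both inputs must cover the same sounds in the same order; the number
    of units (model) and voxels (brain) may differ.
    """
    m, b = rdm(model_acts), rdm(brain_resps)
    if m.shape != b.shape:
        raise ValueError("inputs must contain the same number of sounds")
    upper = np.triu_indices(m.shape[0], k=1)  # skip diagonal and duplicates
    return float(np.corrcoef(m[upper], b[upper])[0, 1])


# Placeholder data (random, NOT from the study): 20 sounds,
# 64 model units in one layer, 30 voxels in one brain region.
rng = np.random.default_rng(0)
layer_activations = rng.normal(size=(20, 64))
voxel_responses = rng.normal(size=(20, 30))

score = rsa_score(layer_activations, voxel_responses)
print(f"RSA score for this layer/region pair: {score:.3f}")
```

A score near 1 would mean the model layer and the brain region treat the same pairs of sounds as similar or different; with unrelated random inputs like the ones above, the score should sit near zero.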

In 2018, McDermott and then-graduate student Alexander Kell reported that when they trained a neural network to perform auditory tasks (such as recognizing words from an audio signal), the internal representations generated by the model resembled those seen in fMRI scans of people listening to the same sounds.

Since then, these types of models have become widely used, so McDermott’s research group set out to evaluate a larger set of models to see whether the ability to approximate the neural representations seen in the human brain is a general trait of these models.

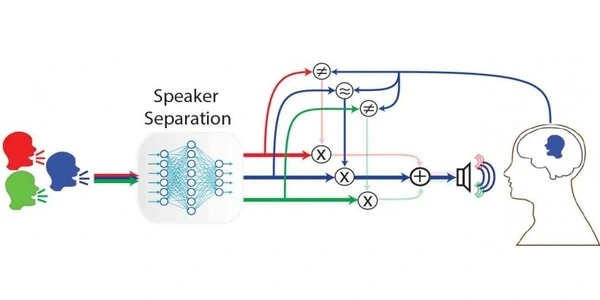

For this study, the researchers analyzed nine publicly available deep neural network models that had been trained to perform auditory tasks, and they also created 14 models of their own, based on two different architectures. Most of these models were trained to perform a single task (recognizing words, identifying the speaker, recognizing environmental sounds, or identifying musical genre), while two of them were trained to perform multiple tasks.

When the researchers presented these models with natural sounds that had been used as stimuli in human fMRI experiments, they found that the internal model representations tended to resemble those generated by the human brain. The models whose representations were most similar to those seen in the brain were those that had been trained on more than one task and on auditory input that included background noise.

“If you train models in noise, they give better brain predictions than if you don’t, which is intuitively reasonable because a lot of real-world hearing involves hearing in noise, and that’s plausibly something the auditory system is adapted to,” Feather says.
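The article does not describe how noise was added to the training audio. A common way to build noisy training examples is to mix a clean signal with a background recording at a chosen signal-to-noise ratio (SNR). The sketch below assumes that approach and uses NumPy; `mix_at_snr`, the synthetic tone, and the 3 dB value are illustrative choices, not details from the study.

```python
import numpy as np


def mix_at_snr(signal, noise, snr_db):
    """Add noise to signal so the result has the requested SNR in decibels.

    The noise is trimmed to the signal length and rescaled so that
    10 * log10(signal_power / noise_power) equals snr_db.
    """
    signal = np.asarray(signal, dtype=float)
    noise = np.asarray(noise, dtype=float)
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as the signal")
    noise = noise[: len(signal)]

    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    if noise_power == 0:
        raise ValueError("noise is silent; cannot scale it to a target SNR")

    target_noise_power = signal_power / (10 ** (snr_db / 10))
    scaled_noise = noise * np.sqrt(target_noise_power / noise_power)
    return signal + scaled_noise


# Placeholder audio: a 440 Hz tone plus white noise, one second at 16 kHz.
sample_rate = 16_000
t = np.arange(sample_rate) / sample_rate
clean = np.sin(2 * np.pi * 440 * t)
background = np.random.default_rng(0).normal(size=sample_rate)

noisy = mix_at_snr(clean, background, snr_db=3.0)
```

Lower SNR values make the background louder relative to the target sound, so sweeping `snr_db` over a range is one way to vary how hard the listening conditions are during training.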

Hierarchical processing

The new study also supports the idea that the human auditory cortex has some degree of hierarchical organization, in which processing is divided into stages that support distinct computational functions. As in the 2018 study, the researchers found that representations generated in earlier stages of the model most closely resemble those seen in the primary auditory cortex, while representations generated in later model stages more closely resemble those generated in brain regions beyond the primary cortex.
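One simple way to summarize a layer-to-region correspondence like this is to score every model layer against every brain region and then pick the best-matching layer for each region. The sketch below assumes such a score table already exists. The function name, layer names, region names, and all numbers are invented placeholders for illustration and are not results from the study.

```python
import numpy as np


def best_layer_per_region(scores, layer_names, region_names):
    """Return {region: best-matching layer} from a score table.

    scores: array of shape (n_layers, n_regions); higher means the layer's
    representations are more similar to that region's responses.
    """
    scores = np.asarray(scores, dtype=float)
    if scores.shape != (len(layer_names), len(region_names)):
        raise ValueError("scores must have shape (n_layers, n_regions)")
    best = scores.argmax(axis=0)  # index of the top layer in each column
    return {region: layer_names[i] for region, i in zip(region_names, best)}


# Hypothetical layers ordered from early to late, and made-up scores.
layers = ["conv1", "conv3", "conv5", "fc"]
regions = ["primary", "non_primary"]
toy_scores = [
    [0.30, 0.10],
    [0.45, 0.25],
    [0.35, 0.50],
    [0.20, 0.40],
]

mapping = best_layer_per_region(toy_scores, layers, regions)
print(mapping)
```

In a hierarchical correspondence of the kind described above, the layer chosen for the primary region would sit earlier in the network than the layer chosen for non-primary regions.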

The researchers also found that models trained on different tasks were better at replicating different aspects of audition. For example, models trained on a speech-related task more closely resembled speech-specific regions of the brain.

“Even though the model has seen the exact same training data and the architecture is the same, when you optimize for one particular task, you can see that it selectively explains specific tuning properties in the brain,” Tuckute says.

McDermott’s lab now plans to use these findings to develop models that are even better at reproducing human brain responses. In addition to helping scientists learn more about how the brain is organized, such models could be used to develop better hearing aids, cochlear implants, and brain-machine interfaces.

“A goal of our field is to end up with a computer model that can predict brain responses and behavior. We think that if we are successful in reaching that goal, it will open a lot of doors,” McDermott says.