In a peer-reviewed opinion paper published in the journal Patterns, researchers show that computer programs commonly used to determine whether a text was written by artificial intelligence tend to falsely label articles written by non-native English speakers as AI-generated. The researchers caution against using such AI text detectors because of their unreliability, which could harm individuals such as students and job applicants.

“Our current recommendation is that we be extremely cautious and try to avoid using these detectors as much as possible,” says senior author James Zou of Stanford University. “It can have significant consequences if these detectors are used to review things like job applications, college entrance essays, or high school assignments.”

OpenAI’s ChatGPT chatbot can compose essays, answer science and math questions, and generate computer code. Educators across the United States are increasingly concerned about the use of AI in student work, and many have begun using GPT detectors to screen students’ assignments. These detectors are platforms that claim to identify whether a text was generated by AI, but their reliability and effectiveness remain unproven.

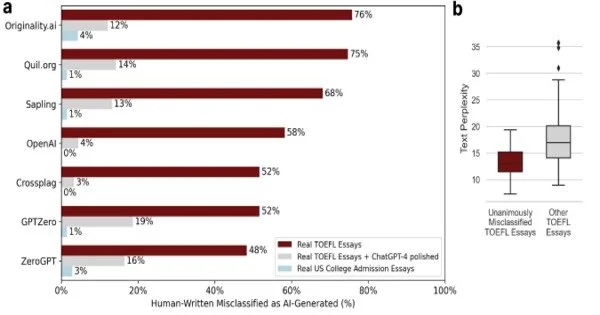

Zou and his colleagues tested seven popular GPT detectors. They ran 91 English essays through the detectors, all written by non-native English speakers for a widely recognized English proficiency test, the Test of English as a Foreign Language (TOEFL). The detectors mistakenly identified more than half of the essays as AI-generated, and one detector flagged approximately 98% of them as written by AI. By comparison, the detectors correctly classified more than 90% of essays written by eighth-grade students in the United States as human-written.
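The comparison above amounts to measuring a false positive rate for each group of writers: the share of human-written essays that a detector labels as AI-generated. The following is a minimal sketch of that calculation. The function name `false_positive_rate` and the verdict lists are hypothetical placeholders chosen for illustration; they are not the study's data or code.

```python
def false_positive_rate(verdicts):
    """Share of human-written texts that a detector flagged as AI-generated.

    verdicts: list of booleans, one per human-written essay,
              where True means the detector flagged the essay as AI.
    """
    if not verdicts:
        raise ValueError("need at least one verdict")
    return sum(verdicts) / len(verdicts)


# Placeholder verdicts for illustration only; NOT the study's data.
group_a = [True, True, False, True]    # hypothetical detector output, group A
group_b = [False, False, False, True]  # hypothetical detector output, group B

fpr_a = false_positive_rate(group_a)
fpr_b = false_positive_rate(group_b)

# A large gap between groups is the kind of disparity the researchers describe.
gap = fpr_a - fpr_b
print(f"group A: {fpr_a:.0%}, group B: {fpr_b:.0%}, gap: {gap:.0%}")
```

Because every essay in such a test is known to be human-written, every flag counts as an error, so a single rate per group is enough to expose a disparity.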

To mitigate these biases and improve interactions with non-native English speakers, developers can fine-tune models on more diverse datasets that include content from non-native speakers and actively work to reduce biases in both the training data and the model’s responses.

According to Zou, these detectors work by evaluating text perplexity, a measure of how surprising the word choice in a text is. “If you use common English words, the detectors will give you a low perplexity score, which means my essay will most likely be flagged as AI-generated. If you use complex and fancy words, the algorithms are more likely to classify it as human-written,” he explains. This is because large language models such as ChatGPT are trained to generate text with low perplexity in order to better simulate how an average person speaks, Zou adds.

As a result, non-native English writers, who often use simpler word choices, are more likely to have their work flagged as AI-generated.
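The perplexity heuristic can be illustrated with a toy sketch. Real detectors score text with large language models; here a tiny add-one-smoothed unigram model stands in for one, and the reference corpus, the sample sentences, and the cutoff `THRESHOLD` are all assumptions made up for the example. Perplexity is computed as the exponential of the average negative log-probability per word, so text built from common words scores low and text built from rare words scores high.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build an add-one-smoothed unigram model from a list of tokens.

    All unseen words share a single extra "unknown" slot in the vocabulary.
    """
    counts = Counter(corpus_tokens)
    total = len(corpus_tokens)
    vocab_size = len(counts) + 1  # +1 for the shared "unknown" slot

    def prob(token):
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """exp of the average negative log-probability per token."""
    avg_nll = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_nll)


# Toy reference corpus standing in for a language model's training data.
reference = (
    "the cat sat on the mat and the dog sat on the rug "
    "the cat and the dog are good friends"
).split()
prob = train_unigram(reference)

plain = "the cat sat on the mat".split()                # common words
ornate = "the feline reposed upon the tapestry".split()  # rare words

ppl_plain = perplexity(plain, prob)
ppl_ornate = perplexity(ornate, prob)

THRESHOLD = 12.0  # hypothetical cutoff, chosen only for this toy example


def flag_as_ai(tokens):
    """Naive detector: low perplexity is treated as a sign of AI text."""
    return perplexity(tokens, prob) < THRESHOLD


print(f"plain:  {ppl_plain:.1f}  flagged={flag_as_ai(plain)}")
print(f"ornate: {ppl_ornate:.1f}  flagged={flag_as_ai(ornate)}")
```

In this sketch both sentences are human-written, yet only the plainly worded one falls below the cutoff. That is the failure mode described above: a threshold on perplexity penalizes simple vocabulary regardless of who wrote the text.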

The team then fed the human-written TOEFL essays into ChatGPT and prompted it to edit the text using more sophisticated language, including replacing simple words with more complex vocabulary. The GPT detectors classified these AI-edited essays as human-written.

“We should be very cautious about using any of these detectors in classroom settings,” Zou says, “because there are still a lot of biases, and they’re easy to fool with just the smallest amount of prompt design.”

The use of GPT detectors could also have repercussions beyond education. Search engines like Google, for example, devalue AI-generated content, which may inadvertently silence non-native English writers.

While AI tools can have a positive impact on student learning, GPT detectors need to be improved and tested further before being put to use. According to Zou, one way to improve these detectors is to train them on more diverse types of writing.