Intel 8086 (1978)

This chip was skipped over for the original PC, but was used in a few later computers that didn’t amount to much. It was a true 16-bit processor and talked with its cards via a 16-wire data connection. The chip contained 29,000 transistors and 20 address lines that gave it the ability to talk with up to 1 MB of RAM. What is interesting is that the designers of the time never suspected anyone would ever need more than 1 MB of RAM. The chip was available in 5, 6, 8, and 10 MHz versions.
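The 1 MB figure follows directly from the 20 address lines: each extra line doubles the number of addresses the chip can select. A minimal Python sketch of that arithmetic, assuming one byte per address (the function name is only illustrative):

```python
def addressable_bytes(address_lines: int) -> int:
    """Number of distinct byte addresses selectable with this many address lines."""
    return 2 ** address_lines

MB = 1024 * 1024

# 8086: 20 address lines
print(addressable_bytes(20) // MB)  # 1
```

The same rule gives 16 MB for 24 lines and 4 GB for 32 lines, which matches the memory limits quoted for the later chips in this history.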

Intel 8088 (1979)

The 8088 is, for all practical purposes, identical to the 8086. The only real difference is that it uses an 8-bit external data bus rather than the 16-bit one of the 8086, which allowed cheaper supporting hardware. This chip was the one that was chosen for the first IBM PC, and like the 8086, it is able to work with the 8087 math coprocessor chip.

NEC V20 and V30 (1981)

Clones of the 8088 and 8086. They are supposed to be about 30% faster than the Intel ones, though.

Intel 80186 (1980)

The 186 was a popular chip. Many versions have been developed in its history. Buyers could choose from CHMOS or HMOS, 8-bit or 16-bit versions, depending on what they needed. A CHMOS chip could run at twice the clock speed and at one fourth the power of the HMOS chip. In 1990, Intel came out with the Enhanced 186 family. They all shared a common core design. They had a 1-micron core design and ran at about 25MHz at 3 volts. The 80186 contained a high level of integration, with the system controller, interrupt controller, DMA controller and timing circuitry right on the CPU. Despite this, the 186 never found itself in a personal computer.

Intel 80286 (1982)

A 16-bit, 134,000 transistor processor capable of addressing up to 16 MB of RAM. In addition to the increased physical memory support, this chip is able to work with virtual memory, thereby allowing much more room for expansion. The 286 was the first “real” processor. It introduced the concept of protected mode. This is the ability to multitask, having different programs run separately but at the same time. This ability was not taken advantage of by DOS, but future operating systems, such as Windows, could play with this new feature. One of the drawbacks of this ability, though, was that while it could switch from real mode to protected mode (real mode was intended to make it backwards compatible with the 8088), it could not switch back to real mode without a warm reboot. This chip was used by IBM in its Advanced Technology PC/AT and was used in a lot of IBM-compatibles. It ran at 8, 10, and 12.5 MHz, but later editions of the chip ran as high as 20 MHz. While these chips are considered paperweights today, they were rather revolutionary for the time period.

Intel 386 (1985 – 1990)

The 386 signified a major increase in technology from Intel. The 386 was a 32-bit processor, meaning its data throughput was immediately twice that of the 286. Containing 275,000 transistors, the 80386DX processor came in 16, 20, 25, and 33 MHz versions. The 32-bit address bus allowed the chip to work with a full 4 GB of RAM and a staggering 64 TB of virtual memory. In addition, the 386 was the first chip to use instruction pipelining, which allows the processor to start working on the next instruction before the previous one is complete. While the chip could run in both real and protected mode (like the 286), it could also run in virtual real mode, allowing several real mode sessions to be run at a time. A multi-tasking operating system such as Windows was necessary to do this, though. In 1988, Intel released the 386SX, which was basically a low-fat version of the 386. It used a 16-bit data bus rather than the 32-bit one, and it was slower, but it used less power, which enabled Intel to promote the chip into desktops and even portables. In 1990, Intel released the 80386SL, which was basically an 855,000 transistor version of the 386SX processor, with ISA compatibility and power management circuitry.
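The 4 GB and 64 TB figures can be checked with a little arithmetic. The sketch below assumes the usual explanation of the 64 TB number: a task can reach 16,384 segments (8,192 in each of two descriptor tables), and each segment can be up to 4 GB long. Those segment counts are not stated in the text above and are included here as an assumption.

```python
GB = 2 ** 30
TB = 2 ** 40

# 32-bit address bus: 2^32 physical byte addresses
physical_bytes = 2 ** 32

# Assumption: 2 descriptor tables x 8,192 segments each, up to 4 GB per segment
segments_per_task = 2 * 8192
virtual_bytes = segments_per_task * physical_bytes

print(physical_bytes // GB)  # 4
print(virtual_bytes // TB)   # 64
```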

386 chips were designed to be user friendly. All chips in the family were pin-for-pin compatible and they were binary compatible with the previous 186 chips, meaning that users didn’t have to get new software to use it. Also, the 386 offered power friendly features such as low voltage requirements and System Management Mode (SMM) which could power down various components to save power. Overall, this chip was a big step for chip development. It set the standard that many later chips would follow. It offered a simple design which developers could easily design for.

Intel 486 (1989 – 1994)

The 80486DX was released in 1989. It was a 32-bit processor containing 1.2 million transistors. It had the same memory capacity as the 386 (both were 32-bit) but offered twice the speed at 26.9 million instructions per second (MIPS) at 33 MHz. There are some improvements here, though, beyond just speed. The 486 was the first to have an integrated floating point unit (FPU) to replace the normally separate math coprocessor (not all flavors of the 486 had this, though). It also contained an integrated 8 KB on-die cache. This increases speed by using the instruction pipelining to predict the next instructions and then storing them in the cache. Then, when the processor needs that data, it pulls it out of the cache rather than using the necessary overhead to access the external memory. Also, the 486 came in 5 volt and 3 volt versions, allowing flexibility for desktops and laptops.

The 486 chip was the first processor from Intel that was designed to be upgradeable. Previous processors were not designed this way, so when the processor became obsolete, the entire motherboard needed to be replaced. With the 486, the same CPU socket could accommodate several different flavors of the 486. Initial 486 offerings were designed to be able to be upgraded using “OverDrive” technology. This means you can insert a chip with a faster internal clock into the existing system. Not all 486 systems could use OverDrive, since it takes a certain type of motherboard to support it.

The first member of the 486 family was the i486DX, but in 1991 they released the 486SX and 486DX/50. Both chips were basically the same, except that the 486SX version had the math coprocessor disabled (yes, it was there, just turned off). The 486SX was, of course, slower than its DX cousin, but the resulting reduced cost and power lent itself to faster sales and movement into the laptop market. The 486DX/50 was simply a 50MHz version of the original 486. The DX could not support future OverDrives while the SX processor could.

In 1992, Intel released the next wave of 486’s making use of OverDrive technology. The first models were the i486DX2/50 and i486DX2/66. The extra “2” in the names indicates that the normal clock speed of the processor is being effectively doubled using OverDrive, so the 486DX2/50 is a 25MHz chip being doubled to 50MHz. The slower base speed allowed the chip to work with existing motherboard designs, but allowed the chip internally to operate at the increased speed, thereby increasing performance.

Also in 1992, Intel put out the 486SL. It was virtually identical to vintage 486 processors, but it contained 1.4 million transistors. The extra innards were used by its internal power management circuitry, optimizing it for mobile use. From there, Intel released various 486 flavors, mixing SL’s with SX’s and DX’s at a variety of clock speeds. By 1994, they were rounding out their continued development of the 486 family with the DX4 Overdrive processors. While you might think these were 4X clock quadruplers, they were actually 3X triplers, allowing a 33 MHz processor to operate internally at 100 MHz.
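The doubling and tripling described above is simple multiplication: the internal clock is the external (motherboard) clock times a fixed multiplier. A small Python sketch, where the function name and the example list are illustrative only, and the nominal “33 MHz” bus is taken to be 33.3 MHz:

```python
def core_clock_mhz(bus_mhz: float, multiplier: float) -> float:
    """Internal clock speed for a given external clock and multiplier."""
    return bus_mhz * multiplier

examples = [
    ("486DX2/50 (2x doubler)", 25.0, 2),
    ("DX4 at 100 MHz (3x tripler)", 100 / 3, 3),  # nominal 33 MHz bus
]

for name, bus, mult in examples:
    print(f"{name}: {core_clock_mhz(bus, mult):.0f} MHz internal")
```

The motherboard only ever sees the slower external clock, which is why these chips could drop into existing board designs.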

AM486DX Series (1994 – 1995)

Intel was not the only manufacturer playing in the sandbox at the time. AMD put out its AM486 series in answer to Intel’s counterpart. AMD released the chip in AM486DX4/75, AM486DX4/100, and AM486DX4/120 versions. It contained on-board cache, power management features, 3-volt operation and SMM mode. This made the chip fitting for mobiles in addition to desktops. The chip found its way into many 486-compatibles.

AMD AM5x86 (1995)

This is the chip that put AMD onto the map as official Intel competition. While I am mentioning it here on the 486 page of the history lesson, it was actually AMD’s competitive response to Intel’s Pentium-class processor. Users of the Intel 486 processor, in order to get Pentium-class performance, had to make use of an expensive OverDrive processor or ditch their motherboard in favor of a true Pentium board. AMD saw an opening here, and the AM5x86 was designed to offer Pentium-class performance while operating on a standard 486 motherboard. They did this by designing the 5x86 to run at 133MHz by clock-quadrupling a 33 MHz chip. This 33 MHz bus allowed it to work on 486 boards. This speed also allowed it to support the 33 MHz PCI bus. The chip also had 16 KB of on-die cache. With all of this together, the 5x86 performed better than a Pentium-75. The chip became the de facto upgrade for 486 users who did not want to ditch their 486-based PCs yet.

The Pentium (1993)

By this time, the Intel 486 was entrenched in the market. Also, people were used to the traditional 80x86 naming scheme. Intel was busy working on its next generation of processor. It was not to be called the 80586, though. There were some legal issues surrounding the ability for Intel to trademark the numbers 80586. So, instead, Intel changed the name of the processor to the Pentium, a name they could easily trademark. They released the Pentium in 1993. The original Pentium performed at 60 MHz and 100 MIPS. Also called the “P5” or “P54”, the chip contained 3.21 million transistors and worked on the 32-bit address bus (same as the 486). It had a 64-bit external data bus which could operate at roughly twice the speed of the 486.

The Pentium family includes the 60/66/75/90/100/120/133/150/166/200 MHz clock speeds. The original 60/66 MHz versions operated on the Socket 4 setup, while all of the remaining versions operated on the Socket 7 boards. Some of the chips (75MHz – 133MHz) could operate on Socket 5 boards as well. Pentium is compatible with all of the older operating systems including DOS, Windows 3.1, Unix, and OS/2. Its superscalar design can execute two instructions per clock cycle. The two separate 8K caches (code cache and data cache) and the pipelined floating point unit increase its performance beyond the earlier x86 chips. It had the SL power management features of the i486SL, but the capability was much improved. It has 273 pins that connect it to the motherboard. Internally, though, it’s really two 32-bit chips chained together that split the work. The first Pentium chips operated at 5 volts and thus ran rather hot. Starting at the 100MHz version, the requirement was reduced to 3.3 volts. Starting at the 75MHz version, the chip also supported Symmetric Dual Processing, meaning you could use two Pentiums side by side in the same system.

The Pentium stayed around a long time. It was released in many different speeds as well as different flavors. In fact, Intel implemented an “s-spec” rating which is marked on each Pentium CPU which tells the owner some key data about the processor in order to make sure they have their motherboard set correctly. There were just so many different Pentiums out there that it became hard to tell. You can look up processor specs using the s-spec at the link below.

The Pentium Pro (1995-1999)

If the regular Pentium is an ape, this processor evolved into being human. The Pentium Pro (also called “P6” or “PPro”) is a RISC chip with a 486 hardware emulator on it, running at 200 MHz or below. Several techniques are used by this chip to produce more performance than its predecessors. Increased speed is achieved by dividing processing into more stages, and more work is done within each clock cycle. Three instructions can be decoded in each clock cycle, as opposed to only two for the Pentium. In addition, instruction decoding and execution are decoupled, meaning that instructions can still be executed if one pipeline stops (such as when one instruction is waiting for data from memory; the Pentium would stop all processing at this point). Instructions are sometimes executed out of order, that is, not necessarily as written down in the program, but rather when information is available, although they won’t be much out of sequence; just enough to make things run smoother. Such improvements to the PPro resulted in a chip optimized for higher end desktop workstations and network servers.

It has two separate 8K L1 caches (one for data and one for instructions), and up to 1 MB of onboard L2 cache in the same package. The onboard L2 cache increased performance in and of itself because the chip did not have to make use of an L2 cache on the motherboard. The PPro is optimized for 32-bit code, so it will run 16-bit code no faster than a Pentium, which is a big drawback. It’s still a great processor for servers, since it can be used in multiprocessor systems with 4 processors. Another good thing about the Pentium Pro is that with the use of a Pentium II OverDrive processor, you have all the perks of a normal Pentium II, but the L2 cache is full speed, and you get the multiprocessor support of the original Pentium Pro.

Cyrix 6x86 Series (1995)

Cyrix, by this time, was a major player in the alternative processor market. They had been around since 1992, with their release of the 486SLC. By 1995, they had their own 5x86 processor and it was considered the only real competition to the AMD counterpart. But, they released their 6x86 in 1995. It was designed to go head to head with Intel’s Pentium processor. Dubbed “M1”, the chip contained two super-pipelined integer units, an on-die FPU, and 16 KB of write-back cache. It used many of the same techniques internally as the Intel and AMD chips to increase performance. Like AMD beginning with their K5 (see below), Cyrix used the P-rating system. It came in PR-120, 133, 150, 166 and 200 versions. Each rating had a “+” after it, indicating that it performed better than the corresponding Pentium. But, did it?

Cyrix had a reputation for lagging in the area of performance, and the M1 was not an exception. The chip used a weaker FPU than both AMD and Intel, meaning it could not keep up with the competition in areas such as 3D gaming or other math-intensive software. On top of that, the chip had a reputation for running hot. Users had to get CPU fans that could keep these hot processors cool enough to run stably. Cyrix tried to combat this issue with the 6x86L processor. This “low power” processor made use of a split voltage (3.3 volts for I/O and 2.8 volts internally).

MediaGX (1996)

MediaGX was Cyrix’s answer to low-cost entry level PC’s. Making use of a standard x86 processor core, the chip lowered the cost of PCs using it by integrating many of the common PC components into the chip itself. MediaGX had integrated audio and video circuitry, as well as circuitry to handle many of the common tasks normally handled by chips on the motherboard itself. The CPU spoke directly to a PCI bus and DRAM memory, and the video was rather high-quality SVGA (for the time). It could support up to 128 MB of EDO RAM in 4 separate memory banks, and the video sub-system could support resolutions of up to 1280×1024×8 or 1024×768×16.
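To put those two video modes in perspective, here is a rough Python sketch of how much memory a single frame needs at each one. It assumes the third number in each mode is the color depth in bits per pixel, and the function name is purely illustrative.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one full frame at the given resolution and color depth."""
    return width * height * bits_per_pixel // 8

MIB = 1024 * 1024

print(framebuffer_bytes(1280, 1024, 8) / MIB)   # 1.25
print(framebuffer_bytes(1024, 768, 16) / MIB)   # 1.5
```

Both modes come in at under 2 MB per frame, a small slice of the 128 MB of RAM the chip could address.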

The integration of MediaGX was actually spanned across two chips: the processor itself and the MediaGX Cx5510. The chip requires a specially designed motherboard. It is not Socket 7 compatible. As a result, it is really an outsider in relation to the other processors we were discussing, but being that it was on the timetrack of history for CPUs, it bears mentioning.

AMD K5 (1996)

While AMD was competing with Intel with their 5x86 processor, this chip was not a true Pentium alternative. In 1996, however, AMD released the K5. This chip was designed to go head to head with the Pentium processor. It was designed to fit right into Socket 7 motherboards, allowing users to drop K5’s into the motherboards they might have already had. The chip was fully compatible with all x86 software. In order to rate the speed of the chips, AMD devised the P-rating system (or PR rating). This number identified the speed as compared to the true Intel Pentium equivalent. K5’s ran from 75 MHz to 166 MHz (in P-ratings, that is). They contained 24KB of L1 cache and 4.3 million transistors. While the K5’s were nice little chips for what they were, AMD quickly moved on with their release of K6.

Pentium MMX (1997)

Intel released many different flavors of the Pentium processor. One of the more improved flavors was the Pentium MMX, released in 1997. It was a move by Intel to improve the original Pentium and make it better serve the needs in the multimedia and performance department. One of the key enhancements, and where it gets its name from, is the MMX instruction set. The MMX instructions were an extension of the normal instruction set. The 57 additional instructions helped the processor perform certain key tasks more efficiently, allowing it to do with one instruction some tasks that would otherwise have taken several regular instructions. It paid off, too. The Pentium MMX performed up to 10-20% faster with standard software, and higher with software optimized for the MMX instructions. Many multimedia applications and games that took advantage of MMX performed better, had higher frame rates, etc.
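To give a feel for what “one instruction instead of several” means, here is a rough Python emulation of a packed add, the kind of operation MMX provides. It assumes a 64-bit value treated as eight independent 8-bit lanes with wraparound on overflow; that detail is not spelled out above, and the function is only an illustration of the idea, not real MMX code.

```python
def packed_add_u8(a: int, b: int) -> int:
    """Add two 64-bit values lane by lane, as eight separate 8-bit sums.

    Each lane wraps around on overflow and never carries into its neighbor.
    """
    result = 0
    for lane in range(8):
        shift = lane * 8
        x = (a >> shift) & 0xFF
        y = (b >> shift) & 0xFF
        result |= ((x + y) & 0xFF) << shift
    return result

# Eight pixel-sized values brightened by 1 in a single "operation"
print(hex(packed_add_u8(0x01020304050607FF, 0x0101010101010101)))
```

Without such an instruction the processor would work through the eight values one at a time, which is why pixel and audio processing benefited the most.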

MMX was not the only improvement on the Pentium MMX. The dual 8K caches of the Pentium were doubled to 16 KB each. It also had improved dynamic branch prediction, a pipelined FPU, and an additional instruction pipe to allow faster instruction processing. With these and other improvements, the Pentium line of processor was extended even longer. The line lasted up until recently, and went up to 233 MHz. While new PCs with this processor are all but non-existent, there are many older PCs still using this processor and going strong.

AMD K6 (1997)

The K6 gave AMD a real leg up in performance, and it virtually closed the gap between Intel and AMD in terms of Intel being perceived as the real performance processor. The K6 processor compared, performance-wise, to the new Intel Pentium II’s, but the K6 was still Socket 7, meaning it was still a Pentium alternative. The K6 took on the MMX instruction set developed by Intel, allowing it to go head to head with Pentium MMX. Based on the RISC86 microarchitecture, the K6 contained seven parallel execution engines and two-level branch prediction. It contained 64KB of L1 cache (32KB for data and 32KB for instructions). It made use of SMM power management, leading to mobile versions of this chip hitting the market. During its life span, it was released in 166MHz to 300 MHz versions. It gave the early Pentium II’s a run for their money, but AMD had to improve on it in order to keep up with Intel for long.

Cyrix 6x86MX (1997)

Well, Intel came up with MMX and AMD was already using it starting with the K6. So, Cyrix had to get in on the game as well. The 6x86MX, also dubbed “M2”, was Cyrix’s answer. This processor took on the MMX instruction set, as well as an increased 64KB cache and an increase in speed. The first M2’s were 150 MHz chips, or a P-rating of PR166 (yes, M2’s also used the P-rating system). The fastest ones operated at 333 MHz, or PR-466.

M2 was the last processor released by Cyrix as a stand-alone company. In 1999, Via Technologies acquired the Cyrix line from its parent company, National Semiconductor.

Pentium II (1997)

Intel made some major changes to the processor scene with the release of the Pentium II. They had the Pentium MMX and Pentium Pro out in the market in a strong way, and they wanted to bring the best of both into one chip. As a result, the Pentium II is kind of like the child of a Pentium MMX mother and a Pentium Pro father. But like real life, it doesn’t necessarily combine the best of its parents. Pentium II is optimized for 32-bit applications. It also contains the MMX instruction set, which is almost a standard by this time. The chip uses the dynamic execution technology of the Pentium Pro, allowing the processor to predict coming instructions, accelerating work flow. It actually analyzes program instructions and re-orders them into the sequence that can be run the quickest. Pentium II has 32KB of L1 cache (16KB each for data and instructions) and has 512KB of L2 cache on package. The L2 cache runs at ½ the speed of the processor, not at full speed. Nonetheless, the fact that the L2 cache is not on the motherboard, but instead in the processor package itself, boosts performance.

One of the most noticeable changes in this processor is the change in the package style. Almost all of the Pentium class processors use the Socket 7 interface to the motherboard. Pentium Pro’s use Socket 8. Pentium II, however, makes use of “Slot 1”. The package-type of the P2 is called Single-Edge contact (SEC). The chip and L2 cache actually reside on a card which attaches to the motherboard via a slot, much like an expansion card. The entire P2 package is surrounded by a plastic cartridge. In addition to Intel’s departure into Slot 1, they also patented the new Slot 1 interface, effectively barring the competition from making competitor chips to use the new Slot 1 motherboards. This move, no doubt, demonstrates why Intel moved away from Socket 7 to begin with – they couldn’t patent it.

The original Pentium II was code-named “Klamath”. It ran at a paltry 66 MHz bus speed and ranged from 233MHz to 300MHz. In 1998, Intel did some slight re-working of the processor and released “Deschutes”. They used a 0.25 micron design technology for this one, and allowed a 100MHz system bus. The L2 cache was still separate from the actual processor core and still ran at only half speed. They would not rectify this issue until the release of the Celeron A and Pentium III. Deschutes ran from 333MHz to up to 450 MHz.

Celeron (1998)

About the time Intel was releasing the improved P2’s (Deschutes), they decided to tackle the entry level market with a stripped down version of the Pentium II, the Celeron. In order to decrease costs, Intel removed the L2 cache from the Pentium II. They also removed the support for dual processors, an ability that the Pentium II had. Additionally, they ditched the plastic cover which the P2 had, leaving simply the processor on the Slot 1 style card. This, no doubt, reduced the cost of the processor quite a bit, but performance suffered noticeably. Removing the L2 cache from a chip seriously hampers its performance. On top of that, the chip was still limited to the 66MHz system bus. As a result, competitor chips at the same clock speeds could still outperform the Celeron. What was the point?

Intel corrected their mistake with the next edition of the Celeron, the Celeron 300A. The 300A came with 128KB of L2 cache on board. The L2 cache was on-die with the 300A, meaning it ran at full processor speed, not half speed like the Pentium II. This fact was great for Intel users, because the Celerons with full speed cache operated much better than the Pentium II’s with 512 KB of cache running at half speed. With this fact, and the fact that Intel unleashed the bus speed of the Celeron, the 300A became well-known in overclocking enthusiast circles. It quickly became known as the cheap chip you could buy and crank up to compete with the more expensive stuff.
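The full-speed versus half-speed difference is easy to put into numbers. A minimal Python sketch, using a 300 MHz part of each type as the example (the function name and the choice of clock speed are illustrative only):

```python
def l2_clock_mhz(core_mhz: float, l2_ratio: float) -> float:
    """Clock speed of the L2 cache, given the core clock and the cache-to-core ratio."""
    return core_mhz * l2_ratio

# Pentium II at 300 MHz: 512 KB of L2 at half the core speed
print(l2_clock_mhz(300, 0.5))  # 150.0

# Celeron 300A: 128 KB of on-die L2 at full core speed
print(l2_clock_mhz(300, 1.0))  # 300.0
```

The Celeron’s cache is a quarter of the size but runs twice as fast, and it keeps pace with the core when the chip is overclocked.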

The Celeron is available in two formats. The original Celerons used the patented Slot 1 interface. But, Intel later switched over to a PPGA format, or Plastic Pin Grid Array, also known as Socket 370. This new interface allowed reduced costs in manufacturing. It also allowed cheaper conversion from Socket 7 boards to Socket 370. Motherboard manufacturers found it easier to swap out a Socket 7 socket for a Socket 370 socket, more or less leaving the rest of the board the same. It was more involved to change designs over to a slotted board. Slot 1 Celerons ranged from the original 233MHz up to 433 MHz, while Celerons 300MHz and up were available in Socket 370.

Via also acquired the Centaur processor division from IDT.

AMD K6-2 & K6-3 (1998)

AMD was a busy little company at the time Intel was playing around with their Pentium II’s and Celerons. In 1998, AMD released the K6-2. The “2” shows that there are some enhancements made onto the proven K6 core, with higher speeds and higher bus speeds. They probably were also taking a page out of the Pentium “2” book. The most notable new feature of the K6-2 was the addition of 3DNow technology. Just as Intel created the MMX instruction set to speed multimedia applications, AMD created 3DNow to act as an additional 21 instructions on top of the MMX instruction set. With software designed to use the 3DNow instructions, multimedia applications get even more of a boost. Using 3DNow, larger L1 cache, on-die L2 cache and Socket 7 usability, the K6-2 gained ground in the market without too much trouble. When used with Socket 7 boards that contained L2 cache on board, the integrated L2 cache on the processor meant the motherboard cache was treated as L3 cache.

The K6-3 processor was basically a K6-2 with 256 KB of on-die L2 cache. The chip could compete well with the Pentium II and even Pentium III’s of the early variety. In order to eke out the full potential of the processor core, though, AMD fine tuned the limits of the processor, leading the K6-2 and K6-3 to be a bit picky. The split voltage requirements were pretty rigid, and as a result AMD held a list of “approved” boards that could tolerate such fine control over the voltages. Processor cooling was also an important issue with these chips due to the increased heat. In that regard, they were a bit like the Cyrix 6x86MX processors.

Pentium III (1999)

Intel released the Pentium III “Katmai” processor in February of 1999, running at 450 MHz on a 100MHz bus. Katmai introduced the SSE instruction set, which was basically an extension of MMX that again improved the performance on 3D apps designed to use the new ability. Also dubbed MMX2, SSE contained 70 new instructions, with up to four able to be performed simultaneously. This original Pentium III worked off what was a slightly improved P6 core, so the chip was well suited to multimedia applications. The chip saw controversy, though, when Intel decided to include an integrated “processor serial number” (PSN) on Katmai. The PSN was designed to be able to be read over a network, even the internet. The idea, as Intel saw it, was to increase the level of security in online transactions. End users saw it differently. They saw it as an invasion of privacy. After taking a hit in the eye from the PR perspective and getting some pressure from their customers, Intel eventually allowed the tag to be turned off in the BIOS. Katmai eventually saw 600 MHz, but Intel quickly moved on to the Coppermine.

In April of 2000, Intel released their Pentium III Coppermine. While Katmai had 512 KB of L2 cache, Coppermine had half that at only 256 KB. But, the cache was located directly on the CPU core rather than on the daughtercard as typified in previous Slot 1 processors. This made the smaller cache an actual non-issue, because performance benefited. Coppermine also took on a 0.18 micron design and the newer Single Edge Contact Cartridge 2 (SECC 2) package. With SECC 2, the surrounding cartridge only covered one side of the package, as opposed to previous slotted processors. What’s more, Intel again saw the logic they had when they took Celeron over to Socket 370, so they eventually released versions of Coppermine in socket format. Coppermine also supported the 133 MHz front side bus. Coppermine proved to be a performance chip and it was and still is used by many PCs. Coppermine eventually saw 1+ GHz.

AMD Athlon (1999 – Present)

With the release of the Athlon processor in 1999, AMD’s status in the high performance realm was placed in concrete. The Athlon line continues to this day, with the highest clock speeds all operating off of various designs and improvements off of the Athlon series. But, the whole line started with the original Athlon classic. The original Athlon came at 500MHz. Designed at a 0.25 micron level, the chip boasted a super-pipelined, superscalar microarchitecture. It contained nine execution pipelines, a super-pipelined FPU and an again-enhanced 3DNow technology. These features all rolled into one gave Athlon a real performance reputation. One notable feature of the Athlon is the new Slot interface. While Intel could play games by patenting Slot 1, AMD decided to call the bet by developing a Slot of their own – Slot A. Slot A looks just like Slot 1, although they are not electrically compatible. But, the closeness of the two interfaces allowed motherboard manufacturers to more easily manufacture mainboard PCBs that could be interchangeable. They would not have to re-design an entire board to accommodate either Intel or AMD – they could do both without too much hassle.

Also notable with the release of Athlon was the entirely new system bus. AMD licensed the Alpha EV6 technology from Digital Equipment Corporation. This bus operated at 200MHz, faster than anything Intel was using. The bus had a bandwidth capability of 1.6 GB/s.
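The 1.6 GB/s figure is what you get by multiplying the transfer rate by the width of the bus. The Python sketch below assumes a 64-bit (8-byte) wide data path, which is not stated above, and uses decimal gigabytes; the function name is illustrative.

```python
def peak_bandwidth_gb_s(transfers_mhz: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s (decimal) for a given transfer rate and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mhz * 1e6 * bytes_per_transfer / 1e9

# Athlon system bus: 200 MHz, assumed 64 bits wide
print(peak_bandwidth_gb_s(200, 64))  # 1.6
```

By the same formula, a 100 MHz bus of the same assumed width tops out at 0.8 GB/s.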

Athlon has gone through revisions and improvements and is still being used and marketed. In June of 2000, AMD released the Athlon Thunderbird. This chip came with an improved 0.18 micron design, on-die full speed L2 cache (new for Athlon), DDR RAM support, etc. It is a real workhorse of a chip and has a reputation for being able to be pushed well beyond the speed rating as assigned by AMD. Overclocker’s paradise. Thunderbird was also released in Socket A (or Socket 462) format, so AMD was now returning to its socketed roots just as Intel had already done by this time.

In May 2001, AMD released Athlon “Palomino”, also dubbed the Athlon 4. While the Athlon had now been out for about 2 years, it was now being beaten by Intel’s Pentium IV. The direct competition of the Pentium III was on its way to the museum already, and Athlon needed a boost to keep up with the new contender. The answer was the new Palomino core. The original intention of Palomino was to expand off of the Thunderbird chip, by reducing heat and power consumption. The chip was delayed, but the delay ended up being beneficial. The chip was released first in notebook computers. AMD-based notebooks, until this time, were still using K6-2’s and K6-3’s and thus AMD’s reputation for performance in the mobile market was lacking. So, Athlon 4 brought AMD to the line again in the mobile market. Athlon 4 was later released to the desktop market, workstations, and multiprocessor servers (with its true dual processor support). Palomino made use of a data pre-fetch cache predictor and a translation look-aside buffer. It also made full use of Intel’s SSE instruction set. The chip made use of AMD’s PowerNow! technology, which had actually been around since the K6-2 and 3 days. It allows the chip to change its voltage requirements and clock speed depending on the usage requirement of the time. This was excellent for making the chip appropriate for power-sensitive apps such as mobile systems.

When AMD released the Palomino to the desktop market in October of 2001, they renamed the chip to Athlon XP, and also took on a slightly different naming jargon. Due to the way Palomino executes instructions, the chip can actually perform more work per clock cycle than the competition, namely Pentium IV. Therefore, the chips actually operate at a slower clock speed than AMD makes apparent in the model numbers. They chose to name the Athlon XP versions based on the speed rating of the processor as determined by AMD and their own benchmarking. So, for example, the Athlon XP 1600+ runs at 1.4 GHz, but the average computer user will think 1.6 GHz, which is what AMD wants. But, this is not to say that AMD is tricking anybody. In fact, these chips do perform like the Thunderbird at the rated speed, and perform quite well when stacked against the Pentium IV. In fact, the Athlon XP 1800+ can out-perform the Pentium IV at 2 GHz. Besides the naming, the XP was basically the same as the mobile Palomino released a few months earlier. It did boast a new packaging style that would help AMD’s release of 0.13 micron design chips later on. It also operated on the 133MHz front-side bus (266MHz when DDR is taken into account). AMD continued to use the Palomino core until the release of the Athlon XP 2100+, which was the last Palomino.

In June of 2002, AMD announced the 0.13 micron Thoroughbred-based 2200+ processor. The move was more of a financial one, since there are no real performance gains between Palomino and Thoroughbred. Nonetheless, the smaller die means AMD can produce more of them per silicon wafer, and that just makes sense. AMD is really taunting everyone with news of the coming ClawHammer core, which will be AMD’s next big move. But, with that chip still in the development and testing phase at this point, ClawHammer is not yet ready. Until it is, AMD will keep us mildly entertained with Thoroughbred and keep Intel sweating.

Celeron II (2000)

Just as the Pentium III was a Pentium II with SSE and a few added features, the Celeron II is simply a Celeron with SSE and a few added features. The chip is available from 533 MHz to 1.1 GHz. This chip was basically an enhancement of the original Celeron, and it was released in response to AMD’s coming competition in the low-cost market with the Duron. The PSN of the Pentium III had been disabled in the Celeron II, with Intel stating that the feature was not necessary in the entry-level consumer market. Due to some inefficiencies in the L2 cache and its continued use of the 66MHz bus (unless you overclock), this chip would not hold up too well against the Duron despite being based on the trusted Coppermine core. Celeron II would not be released with true 100 MHz bus support until the 800MHz edition, which was put out at the beginning of 2001.

Intel Processor Generations Summary

| Generation | Intel | Approx. Year |
|---|---|---|
| 1st Generation | 8086 (1) | 1980 |
| | 80186 | 1981 |
| | 80286 | 1982 |
| 2nd Generation | 80286 (2) | 1982 |
| 3rd Generation | 80386 (3) | 1987 |
| 4th Generation | 80486 (4) | 1990 |
| 5th Generation | Pentium (5) | 1993 |
| 6th Generation | Pentium Pro (6) | 1995 |
| | Pentium II | 1996 |
| | Pentium MMX | 1997 |
| | Celeron | 1998 |
| | Pentium 3 | 1999 |
| 7th Generation | Pentium 4 (7) | 2000 |
| | Celeron II | 2000 |
| | Duron | 2000 |
| 8th Generation | Intel Core (8) | 2006 |

Duron (2000 – Current)

In April of 2000, AMD released the Duron “Spitfire”. Spitfire came primarily out of the Athlon Thunderbird lineage, but it had a lighter load of cache onboard, ensuring that it was not a contender in the performance realm with its big cousin. The chip had a 128 KB L1 cache, but only 64 KB of on-die L2. Despite the smaller L2 cache, internal methods of dealing with the L2 cache coupled with other improvements made the Duron a clear winner when compared against the Celeron. Duron also worked with the EV6 bus while Celeron was still working with a 66 MHz bus, and this did not help Celeron at all.

In August of 2001, AMD released the Duron “Morgan”. This chip broke out at 950 MHz but quickly moved past 1 GHz. The Morgan processor core was the key to the improvement of Duron here, and it is comparable to the effect of the Palomino core on the Athlon. In fact, feature-wise, the Morgan core is basically the same as the Palomino core, but with 64 KB of L2 rather than 256 KB.

Pentium IV (2000 – Current)

While we have been talking about AMD’s high-speed Athlon Thunderbirds and Palominos, Intel actually beat AMD to the gun by releasing Pentium IV Willamette in November of 2000. Pentium IV was exactly what Intel needed to again take the torch from AMD. Pentium IV is a truly new CPU architecture and serves as the beginning to new technologies we will see for the next several years. The new NetBurst architecture is designed with future speed increase in mind, meaning P4 is not going to fade away quickly like Pentium III near the 1 GHz mark.

According to Intel, NetBurst is made up of four new technologies: Hyper Pipelined Technology, Rapid Execution Engine, Execution Trace Cache and a 400MHz system bus. Let’s look at the first three, since they require some explanation:

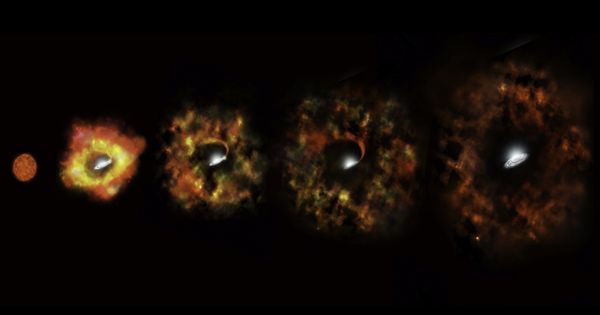

Hyper Pipelined Technology

There are a couple of ways to increase the speed of a processor. One is to shrink the manufacturing process, which makes the die smaller. Technology in this regard is developed quickly, but not quickly enough. The P5 core saw its limit quickly and so did the P6 core (which is why Pentium III was limited at around 1 GHz). The technology to move to a smaller process was not yet ready at the time of the Willamette release, so Intel moved to plan B. Plan B is to change the design of the CPU pipeline so that it is longer, splitting the work on each instruction across more stages. This is what Intel did. Hyper Pipelined Technology refers to Intel’s expanding of the CPU pipeline from 10 stages (of the P6) to 20 stages. This makes the pipeline deeper rather than wider: each stage does less work per clock cycle than a stage of the P6 core, and because each stage does less, the clock can be pushed higher. That is what gives this design room for expandability. It is loosely analogous to breaking an assembly line into more, simpler stations: each station finishes its step sooner, so the whole line can be run at a faster pace. The tradeoff in simply expanding this pipeline to a bunch of stages is that it takes the processor longer to recover from a branch misprediction, since it has to basically start over with 20 stages rather than a shorter 10-stage pipeline. The P4, though, has a newly advanced branch predictor to help with this problem.
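The tradeoff described above can be put into rough numbers. The Python sketch below is a deliberately crude model, not a description of how either core really behaves: it assumes one instruction completes per cycle, that every mispredicted branch costs one cycle per pipeline stage, that 20% of instructions are branches, and that 5% of those are mispredicted. The clock speeds are illustrative as well.

```python
def cycles_per_instruction(pipeline_depth, branch_fraction=0.2, mispredict_rate=0.05):
    """Average cycles per instruction when every mispredicted branch
    costs a full pipeline refill (assumed: one cycle per stage)."""
    return 1.0 + branch_fraction * mispredict_rate * pipeline_depth


def ns_per_instruction(pipeline_depth, clock_ghz, **kwargs):
    """Average time per instruction in nanoseconds at the given clock."""
    return cycles_per_instruction(pipeline_depth, **kwargs) / clock_ghz


# 10-stage pipeline at an assumed 1.0 GHz.
p6_like = ns_per_instruction(pipeline_depth=10, clock_ghz=1.0)

# 20-stage pipeline at the same clock: the longer refill makes it slower.
p4_same_clock = ns_per_instruction(pipeline_depth=20, clock_ghz=1.0)

# 20-stage pipeline at an assumed 1.5 GHz: the higher clock more than pays it back.
p4_faster_clock = ns_per_instruction(pipeline_depth=20, clock_ghz=1.5)

for label, value in [("10 stages @ 1.0 GHz", p6_like),
                     ("20 stages @ 1.0 GHz", p4_same_clock),
                     ("20 stages @ 1.5 GHz", p4_faster_clock)]:
    print(f"{label}: {value:.2f} ns per instruction")
```

Under these assumptions the deeper pipeline loses at equal clock speed and wins once the clock rises far enough, which is the bet the design makes. Lowering `mispredict_rate` shows why a better branch predictor matters more as the pipeline gets longer.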

Rapid Execution Engine

The Pentium IV contains 2 arithmetic logic units and they operate at twice the core clock speed of the processor. While this might sound like absolute heaven, it is good to keep in mind that they had to do it this way due to the pipeline design in order to even keep integer performance up to that of the Pentium III. So, this is really a necessary design change due to the increased pipeline depth.

Execution Trace Cache

Intel also did some re-working of the P4’s internal cache in order to soften the effects of a branch misprediction, which can be a real lag with a 20-stage pipeline. First, they increased the branch target buffer size to eight times that of the Pentium III. This buffer is the area from which the branch predictor gets its data. Secondly, Intel reduced the size of the L1 data cache to only 8K in order to reduce the latency of the cache. This, no doubt, increases the need for the 256 KB on-die L2 cache, and the latency of that has been improved on the P4 as well. Lastly, Intel added an execution trace cache. This is a new cache that can hold instructions that are already decoded and ready for execution. This means that the processor does not have to again waste time decoding every instruction when a branch prediction error occurs. Instead, it can just go to this 12K cache and retrieve the operation and go.
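The idea behind a cache of already-decoded instructions can be illustrated with a toy Python model. This is only a sketch of the caching principle: the class name, the one-entry-per-address layout, the oldest-first eviction and the string-splitting "decoder" are all invented for illustration, and the real trace cache organizes its contents quite differently.

```python
class DecodedOpCache:
    """Toy cache that remembers the decoded form of each instruction address."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = {}      # address -> decoded form (dicts keep insertion order)
        self.decode_count = 0  # how many times the slow decoder actually ran
        self.hits = 0

    def _decode(self, raw):
        """Stand-in for the expensive instruction decoder."""
        self.decode_count += 1
        return tuple(raw.replace(",", " ").lower().split())

    def fetch(self, address, raw):
        """Return the decoded instruction, decoding only on a cache miss."""
        if address in self.entries:
            self.hits += 1
            return self.entries[address]
        decoded = self._decode(raw)
        if len(self.entries) >= self.capacity:
            oldest = next(iter(self.entries))  # evict the oldest entry
            del self.entries[oldest]
        self.entries[address] = decoded
        return decoded


# A three-instruction loop body (hypothetical addresses), executed four times.
loop_body = {0x100: "ADD EAX, 1", 0x103: "CMP EAX, 10", 0x106: "JNE 0x100"}
cache = DecodedOpCache(capacity=8)
for _ in range(4):
    for address, raw in loop_body.items():
        cache.fetch(address, raw)

print(f"decodes: {cache.decode_count}, cache hits: {cache.hits}")
```

Each instruction is decoded once on the first pass and served from the cache on the other three, so twelve fetches cost only three decodes. That is the saving the text describes when the processor has to restart after a misprediction.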

The early Pentium IV’s made use of the Socket 423 interface. One of the reasons for the new interface is the addition of heatsink retention mechanisms to either side of the socket. This is a move to help owners avoid the dreaded mistake of crushing the CPU core by clamping the heatsink down on it too tightly. The retention bases hold the heatsink onto the CPU. Socket 423 was short-lived, and Pentium IV quickly moved to Socket 478 with the release of the 1.9 GHz. Also, P4 was, at its launch, associated exclusively with Rambus RDRAM. Intel was stuck in this agreement with Rambus, and this was an obvious hurdle for promotion, being that most computer users do not have Rambus and don’t wish to buy any. So, early retail P4’s actually came packaged with two 64MB sticks of RDRAM. Once chipset support arrived, the Pentium IV could eventually be paired with DDR memory.

Pentium IV’s, as you might expect, were and still are on the expensive end of things. The new core was quite big when compared to other processors and the cost to produce it was inherently higher. In early 2002, Intel announced a new edition of the Pentium IV based on the Northwood core. The big news with this is that Intel leaves the larger 0.18 micron Willamette core in favor of this new 0.13 micron Northwood. This shrunk the core and therefore allowed Intel to not only make Pentium IV’s cheaper but also make more of them. The core is still bigger than that of the Athlon XP, but this is explained by the fact that Intel increased the L2 cache from 256 KB to 512 KB for Northwood. This raises the transistor count from 42 million for Willamette to 55 million for Northwood. Northwood was first released in 2 GHz and 2.2 GHz versions, but the new design gives P4 room to move up to 3 GHz quite easily. It was recently released at 2.53 GHz using a 533 MHz front side bus. Other than that, Northwood is architecturally the same as Willamette.

Well, I have brought you through a summarized history of the modern processor. I started with the very first personal computer processor and took you all the way up to the very latest (as of now) processors. I have touched on the key aspects of the history. There have, for sure, been a few wild cards in that history: processors that were a bit of a fluke and never caught on anywhere worth mentioning. Even today, we have Via, the chipset maker, trying to make something out of its acquisition of the otherwise failing IDT and Cyrix. In June of 2000, they released the Via C3 Samuel I, a Socket 370, 0.18 micron chip running between 500 and 750 MHz. The chip suffered from a lack of L2 cache, and it lagged way behind anything else on the market, so it was all but forgotten. Other editions of C3 suffered the same fate. In early 2002, it received a shot in the arm with a switch to 0.13 micron design and SSE support, but it is still way behind the times in terms of performance and, as a result, it doesn’t get much attention.

This summarized history demonstrates Moore’s law with remarkable accuracy. As defined by Webopedia.com, Moore’s law is:

The observation made in 1965 by Gordon Moore, co-founder of Intel, that the number of transistors per square inch on integrated circuits had doubled every year since the integrated circuit was invented. Moore predicted that this trend would continue for the foreseeable future. In subsequent years, the pace slowed down a bit, but data density has doubled approximately every 18 months, and this is the current definition of Moore’s Law, which Moore himself has blessed. Most experts, including Moore himself, expect Moore’s Law to hold for at least another two decades.
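As a rough check against this definition, the doubling period implied by two chips in this history can be worked out in Python. The transistor counts are the ones given in this article (29,000 for the 8086 in 1978 and 42 million for the Pentium IV Willamette in 2000); note that these are transistors per chip rather than density per square inch, so the comparison is only approximate, and the function names are made up for illustration.

```python
import math


def doubling_period_months(count_start, year_start, count_end, year_end):
    """Months per doubling implied by two (year, transistor count) points."""
    doublings = math.log2(count_end / count_start)
    return (year_end - year_start) * 12 / doublings


def projected_count(count_start, months_elapsed, period_months):
    """Transistor count after steady doubling every `period_months`."""
    return count_start * 2 ** (months_elapsed / period_months)


# 8086 (1978, 29,000 transistors) to Pentium IV Willamette (2000, 42 million).
period = doubling_period_months(29_000, 1978, 42_000_000, 2000)
print(f"Implied doubling period: {period:.1f} months")

# What a strict 18-month doubling would have predicted for the year 2000.
strict = projected_count(29_000, (2000 - 1978) * 12, 18)
print(f"18-month doubling would predict about {strict / 1e6:.0f} million transistors")
```

With these two data points the implied pace comes out at roughly two years per doubling, somewhat slower than the 18-month version of the law quoted above.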

We can easily see that the pace of CPU development picked up remarkably in the last few years. Intel and AMD took turns being in the lead. Intel was no longer the de facto leader of the processor industry. The fierce competition quickly took us from a few hundred megahertz to well over 2 gigahertz. It drove speeds upwards and prices downwards, and today the consumer has a myriad of high-performance options to choose from without putting a major dent in the old wallet.

The future, no doubt, will be just as full of competition. Intel’s Pentium IV core has a lot of room for expansion, and AMD’s upcoming ClawHammer should prove quite exciting. When this all happens, I’ll have to plan another update to this ever-evolving historical account. Until then…

Intel Pentium Dual-Core

From Wikipedia, the free encyclopedia

The Pentium Dual-Core brand refers to lower-end x86-architecture microprocessors from Intel. They were based on either the 32-bit Yonah or 64-bit Allendale processors (with very different microarchitectures) targeted at mobile or desktop computers respectively.

In 2006, Intel announced a plan to return the Pentium brand from retirement to the market, as a moniker of low-cost Core architecture processors based on single-core Conroe-L, but with 1 MB cache. The numbers for those planned Pentiums were similar to the numbers of the later Pentium Dual-Core CPUs, but with the first digit “1”, instead of “2”, suggesting their single-core functionality. Apparently, a single-core Conroe-L with 1 MB cache was not strong enough to distinguish the planned Pentiums from other planned Celerons, so it was substituted by dual-core CPUs, bringing the “Dual-Core” add-on to the “Pentium” moniker.

The first processors using the brand appeared in notebook computers in early 2007. Those processors, named Pentium T2060, T2080, and T2130, had the 32-bit Pentium M-derived Yonah core, and closely resembled the Core Duo T2050 processor with the exception of having 1 MB L2 cache instead of 2 MB. All three of them had a 533 MHz FSB connecting CPU with memory. Apparently, “Intel developed the Pentium Dual-Core at the request of laptop manufacturers”.

Subsequently, on June 3, 2007, Intel released the desktop Pentium Dual-Core branded processors[5] known as the Pentium E2140 and E2160. An E2180 model was released later in September 2007. These processors support the Intel64 extensions, being based on the newer, 64-bit Allendale core with Core microarchitecture. These closely resembled the Core 2 Duo E4300 processor with the exception of having 1 MB L2 cache instead of 2 MB[7]. Both of them had an 800 MHz FSB. They targeted the budget market above the Intel Celeron (Conroe-L single-core series) processors featuring only 512 kB of L2 cache. Such a step marked a change in the Pentium brand, relegating it to the budget segment rather than its former position as the mainstream/premium brand.

An article on Tom’s Hardware claims that these CPUs are highly overclockable.

Intel Core 2 Duo Family review

The performance results were interesting, as some of the synthetic and real world testing showed the E6300 to be significantly slower than the E6700. Despite this, the E6300 was still quite a lot faster than the Pentium D 950, which is impressive given that the low-end Core 2 Duo processor is already cheaper. Take the Super PI results, for example: the Pentium D 950 took 37 seconds to complete the 1MB calculation, whereas the E6300 took just 26 seconds. That’s a 30% reduction in run time. There shouldn’t be a doubt in your mind: Core 2 Duo processors have arrived to take over from the Pentium Ds as quickly as possible, and while at it try to recover some of the ground lost to AMD.
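The Super PI figures can be read two ways, and a short Python sketch makes the difference explicit. It uses only the two timings quoted above (37 and 26 seconds); the function names are made up for illustration.

```python
def time_reduction(old_seconds, new_seconds):
    """Fraction of the original run time that was saved."""
    return (old_seconds - new_seconds) / old_seconds


def speedup(old_seconds, new_seconds):
    """How many times faster the new result is than the old one."""
    return old_seconds / new_seconds


# Super PI 1MB: Pentium D 950 at 37 s versus Core 2 Duo E6300 at 26 s.
reduction = time_reduction(37, 26)
gain = speedup(37, 26) - 1.0

print(f"Run time reduced by {reduction:.1%}")
print(f"Throughput improved by {gain:.1%}")
```

The roughly 30% figure is the time saved. Measured as work done per second, the E6300 comes out about 42% ahead in this test.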

In some tests there was barely any performance difference between the E6300 and E6400 processors. For an extra $40 you can get the faster processor, which translates into an overall 10% performance gain. Depending on how much you are willing to spend, the upgrade might be hard to justify.

The story was not very different for the Core 2 Duo E6600 and E6700 processors, as they were only marginally faster than the 2MB L2 cache processors. The L2 cache did not seem to make much of a difference in our tests, which certainly surprised me. Rather, the frequency appears to be the driving factor for now, as games such as UT2004 scored roughly 20fps more each time the frequency was boosted by roughly 270MHz. However, games such as Quake 4 and Prey performed much the same on all four Core 2 Duo processors because of the graphics card bottleneck.

Intel’s Core 2 launch lineup is fairly well rounded as you can see from the table below:

| CPU | Clock Speed | L2 Cache |
|---|---|---|
| Intel Core 2 Extreme X6800 | 2.93GHz | 4MB |
| Intel Core 2 Duo E6700 | 2.66GHz | 4MB |
| Intel Core 2 Duo E6600 | 2.40GHz | 4MB |
| Intel Core 2 Duo E6400 | 2.13GHz | 2MB |
| Intel Core 2 Duo E6300 | 1.86GHz | 2MB |

As the name implies, all Core 2 Duo CPUs are dual core as is the Core 2 Extreme. Hyper Threading is not supported on any Core 2 CPU currently on Intel’s roadmaps, although a similar feature may eventually make its debut in later CPUs. All of the CPUs launching today also support Intel’s Virtualization Technology (VT), run on a 1066MHz FSB and are built using 65nm transistors.

The table above features all of the Core 2 processors Intel will be releasing this year. Early next year Intel will also introduce the E4200, which will be a 1.60GHz part with only an 800MHz FSB, a 2MB cache and no VT support. The E4200 will remain a dual core part, as single core Core 2 processors won’t debut until late next year. On the opposite end of the spectrum Intel will also introduce Kentsfield in Q1 next year, which will be a Core 2 Extreme branded quad core CPU.