According to Joshua Agar, a professor in the Department of Materials Science and Engineering at Lehigh University, understanding structure-property relationships is a crucial goal of materials research. Yet because material structure is intricate and multidimensional, there is currently no metric for understanding it.

Because neural networks can adapt to changing input, they can produce good results without the output criteria having to be redesigned. The concept, which has its roots in artificial intelligence, is also quickly gaining traction in the development of trading systems.

Artificial neural networks, a type of machine learning, can be trained to recognize similarities and even correlate parameters such as structure and properties; however, according to Agar, there are two key problems.

The first is that the great majority of data generated by materials experiments is never analyzed. This is largely because such images, which are created by scientists in laboratories all over the world, are rarely stored in a usable format and rarely shared with other research groups.

The second problem is that neural networks are not very effective at learning symmetry and periodicity (how periodic a material’s structure is), two features of great importance to materials scientists.

Now, a team led by Lehigh University has created a novel machine learning approach that builds similarity projections, allowing researchers to search an unstructured image library for the first time and identify trends.

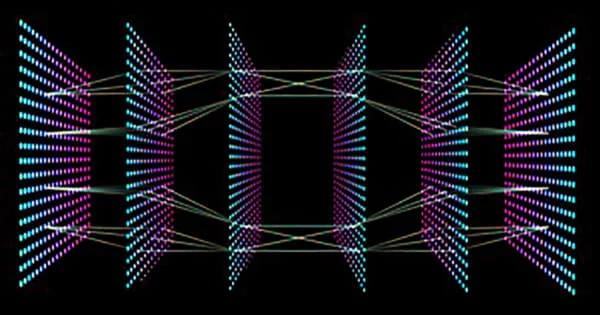

Layers of interconnected nodes make up a neural network. Each node is a perceptron, which works in a similar way to multiple linear regression: it computes a weighted sum of its inputs and then passes that sum through an activation function, which may be nonlinear.
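To make the description of a node concrete, here is a minimal sketch in Python. It is an illustration only, not the network used in the study: the sigmoid activation, the function names `perceptron` and `dense_layer`, and the example weights are all assumptions chosen for clarity.

```python
import math


def perceptron(inputs, weights, bias):
    """One node: a weighted sum of inputs plus a bias (the part that
    resembles multiple linear regression), passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weight_rows, biases):
    """A layer is several nodes that all read the same inputs."""
    return [perceptron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical example values: two inputs feeding a layer of two nodes.
single = perceptron([1.0, 2.0], [0.5, -0.25], 0.0)  # weighted sum is 0, so output is 0.5
layer = dense_layer([1.0, 2.0], [[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0])
print(single, layer)
```

Stacking several such layers, so that each layer's outputs become the next layer's inputs, gives the "layers of interconnected nodes" described above.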

Agar and his colleagues at the University of California, Berkeley, developed and trained a neural network model that included symmetry-aware features, then applied their method to a set of 25,133 piezoresponse force microscopy images collected on a variety of materials systems over the course of five years.

As a result, they were able to group similar classes of material together and observe trends, which provides a foundation for understanding structure-property relationships.

“One of the novelties of our work is that we built a special neural network to understand symmetry and we use that as a feature extractor to make it much better at understanding images,” says Agar, a lead author of the paper where the work is described: “Symmetry-Aware Recursive Image Similarity Exploration for Materials Microscopy,” published today in npj Computational Materials.

In addition to Agar, authors include, from Lehigh University: Tri N. M. Nguyen, Yichen Guo, Shuyu Qin, and Kylie S. Frew and, from Stanford University: Ruijuan Xu. Nguyen, a lead author, was an undergraduate at Lehigh University and is now pursuing a Ph.D. at Stanford.

To arrive at the projections, the researchers used Uniform Manifold Approximation and Projection (UMAP), a nonlinear dimensionality reduction technique. This approach, says Agar, allows researchers to learn, “..in a fuzzy way, the topology and the higher-level structure of the data and compress it down into 2D.”

“If you train a neural network, the result is a vector or a set of numbers that is a compact descriptor of the features. Those features help classify things so that some similarity is learned,” says Agar.

“What’s produced is still rather large in space, though, because you might have 512 or more different features. So, then you want to compress it into a space that a human can comprehend such as 2D, or 3D or, maybe, 4D.”
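The compression step Agar describes can be sketched as follows. This is a deliberately simplified stand-in, not the researchers' pipeline: it uses principal component analysis (a linear method) via NumPy instead of UMAP, and the 512-dimensional "features" are synthetic random data generated only for the demonstration. The function name `project_to_2d` is hypothetical.

```python
import numpy as np


def project_to_2d(features):
    """Project an (n_samples, n_features) array onto its top two
    principal components, giving one 2D point per sample."""
    X = np.asarray(features, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


# Synthetic stand-in for network output: 40 "images" with 512 features each,
# drawn from two made-up groups with different means.
rng = np.random.default_rng(0)
group_a = rng.normal(loc=0.0, scale=1.0, size=(20, 512))
group_b = rng.normal(loc=5.0, scale=1.0, size=(20, 512))
features = np.vstack([group_a, group_b])

embedding = project_to_2d(features)
print(embedding.shape)  # (40, 2)
```

In the 2D output, the two synthetic groups fall on opposite sides of the first axis, which is the kind of structure a human can then inspect in a scatter plot. With the `umap-learn` package installed, the projection call could be swapped for `umap.UMAP(n_components=2).fit_transform(features)` to use the nonlinear technique named in the article.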

Using this method on a set of roughly 25,000 images, Agar and his team were able to group highly similar classes of material together.

“Similar types of structures in the material are semantically close together and also certain trends can be observed particularly if you apply some metadata filters,” says Agar. “If you start filtering by who did the deposition, who made the material, what were they trying to do, what is the material system…you can really start to refine and get more and more similarity. That similarity can then be linked to other parameters like properties.”
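The idea of combining feature similarity with metadata filters can be illustrated with a small sketch. Everything here is assumed for the example: the `ImageRecord` structure, the metadata keys `material_system` and `grower`, the three-number feature vectors, and the choice of cosine similarity as the measure of closeness. None of it is taken from the paper or from DataFed.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    image_id: str
    features: list  # compact descriptor produced by a trained network
    metadata: dict = field(default_factory=dict)


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query, library, top_k=3, **filters):
    """Rank library images by similarity to the query, keeping only
    records whose metadata matches every keyword filter."""
    candidates = [
        r for r in library
        if r.image_id != query.image_id
        and all(r.metadata.get(k) == v for k, v in filters.items())
    ]
    ranked = sorted(
        candidates,
        key=lambda r: cosine_similarity(query.features, r.features),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up library of four records with tiny feature vectors.
library = [
    ImageRecord("img-a", [1.0, 0.0, 0.1], {"material_system": "system-1", "grower": "alice"}),
    ImageRecord("img-b", [0.9, 0.1, 0.0], {"material_system": "system-1", "grower": "bob"}),
    ImageRecord("img-c", [0.0, 1.0, 0.0], {"material_system": "system-2", "grower": "alice"}),
    ImageRecord("img-d", [0.8, 0.3, 0.1], {"material_system": "system-2", "grower": "bob"}),
]
query = library[0]

unfiltered = [r.image_id for r in most_similar(query, library)]
filtered = [r.image_id for r in most_similar(query, library, material_system="system-2")]
print(unfiltered)  # ['img-b', 'img-d', 'img-c']
print(filtered)    # ['img-d', 'img-c']
```

Adding a filter narrows the candidate set before ranking, which mirrors the refinement Agar describes: the same query returns a different, more specific neighborhood once metadata is taken into account.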

This research shows how better data storage and management can speed up the discovery of new materials. Images and data generated by failed experiments, according to Agar, are particularly valuable.

“No one publishes failed results and that’s a big loss because then a few years later someone repeats the same line of experiments,” says Agar. “So, you waste really good resources on an experiment that likely won’t work.”

Rather than losing all of that data, Agar suggests, previously acquired data could be leveraged to reveal trends that have not been seen before, speeding up discovery considerably.

This study is the first “use case” of an innovative new data-storage enterprise housed at Oak Ridge National Laboratory called DataFed. DataFed, according to its website, is “..a federated, big-data storage, collaboration, and full-life-cycle management system for computational science and/or data analytics within distributed high-performance computing (HPC) and/or cloud-computing environments.”

“My team at Lehigh has been part of the design and development of DataFed in terms of making it relevant for scientific use cases,” says Agar. “Lehigh is the first live implementation of this fully-scalable system. It’s a federated database so anyone can pop up their own server and be tied to the central facility.”

Agar is a machine learning expert on the Presidential Nano/Human Interface Initiative team at Lehigh University. The interdisciplinary program, which combines social sciences and engineering, aims to change how people interact with scientific instruments in order to speed up discovery.

“One of the key goals of Lehigh’s Nano/Human Interface Initiative is to put relevant information at the fingertips of experimentalists to provide actionable information that allows more informed decision-making and accelerates scientific discovery,” says Agar.

“Humans have limited capacity for memory and recollection. DataFed is a modern-day Memex; it provides a memory of scientific information that can easily be found and recalled.”

Researchers engaged in multidisciplinary team science will find DataFed especially useful, as it allows collaborators on team projects in different or remote locations to access each other’s raw data.

“This is one of the key components of our Lehigh Presidential Nano/Human Interface (NHI) Initiative for accelerating scientific discovery,” says Martin P. Harmer, Alcoa Foundation Professor in Lehigh’s Department of Materials Science and Engineering and Director of the Nano/Human Interface Initiative.

The work presented here was funded by the Lehigh University Nano/Human Interface Presidential Initiative and a TRIPODS + X award from the National Science Foundation.